AI Agents Are Moving From “Helpful” To “In Charge”

Plus: If you can’t explain what the bot is allowed to do, your customers will explain it for you. Loudly.

📅 February 11, 2026 | ⏱️ 4-min read

Good Morning!

We keep talking about AI like it’s a smarter intern. But this issue is about something else: a new coworker who can click buttons in your systems. Oracle is embedding role-based agents right where quotes get built, cases get moved, and customers either feel taken care of or get bounced around like a pinball. And once AI can act, your biggest CX problem is no longer “How fast can we automate?” It’s “How do we keep trust intact when automation moves faster than human judgment?”

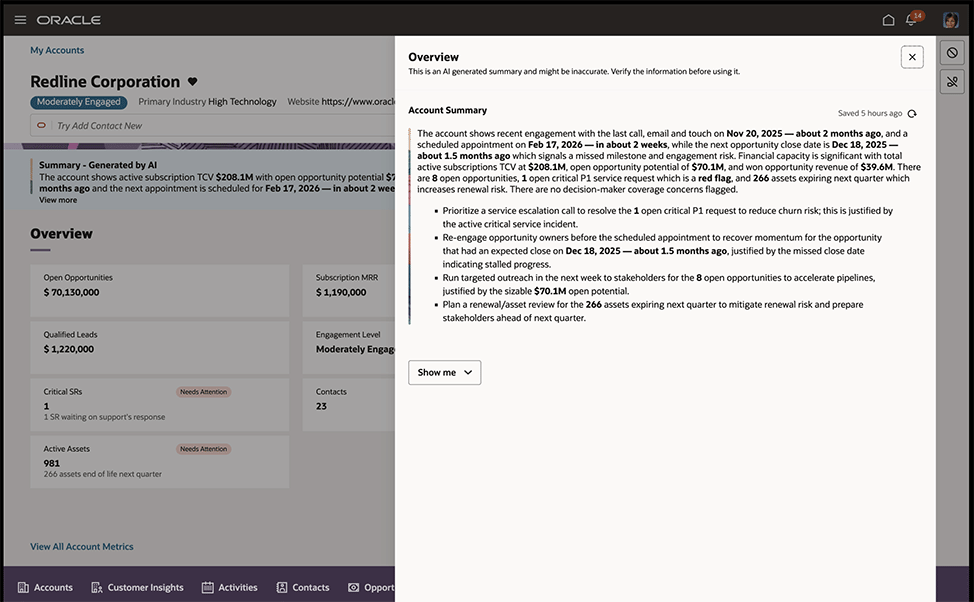

🧠 THE DEEP DIVE: Oracle Embeds Role-Based AI Agents Across Sales, Marketing, and Service

The Big Picture: Oracle announced new role-based AI agents built into Oracle Fusion Cloud workflows to automate common CX tasks across marketing, sales, and service.

What’s happening:

Oracle is positioning these as embedded, role-specific agents, not standalone chatbots. Think agents that live inside the work where reps already click, route, quote, and resolve.

The pitch is workflow automation plus predictive help: analyze unified data, automate steps, and surface next-best actions inside the suite.

The strategic move is about standardizing “agentic” work inside an enterprise stack, where identity, permissions, and data access are already defined.

Why it matters: If these agents actually reduce “swivel chair” work (copying between systems, summarizing cases, assembling quotes), you’ll see faster resolution and cleaner handoffs. But the bigger CX implication is governance: when an AI can take action inside your systems, you need crystal-clear guardrails on what it can do, what it must ask approval for, and how it explains decisions to humans.

The takeaway: Treat “embedded agents” like a new employee class. Define roles, permissions, escalation rules, and quality metrics before you roll out widely. If you can’t audit an action trail, you can’t defend it to customers or regulators.
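To make "roles, permissions, escalation rules, and an action trail" concrete, here is a minimal sketch in Python. It is not Oracle's implementation or API. Every name in it (`AgentRole`, `authorize`, `AuditTrail`, and the action names) is a hypothetical chosen for illustration. The idea is simply that each action an agent attempts is either allowed, escalated to a human, or denied, and that every decision gets logged with a reason a person can read back later.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    NEEDS_APPROVAL = "needs_approval"
    DENY = "deny"


@dataclass(frozen=True)
class AgentRole:
    """Hypothetical role definition for an embedded agent."""
    name: str
    allowed: frozenset          # actions the agent may take on its own
    needs_approval: frozenset   # actions that must be escalated to a human


@dataclass
class AuditTrail:
    """In-memory action trail; a real system would persist this."""
    entries: list = field(default_factory=list)

    def record(self, role: str, action: str, decision: Decision, reason: str) -> None:
        self.entries.append({
            "at": datetime.now(timezone.utc).isoformat(),
            "role": role,
            "action": action,
            "decision": decision.value,
            "reason": reason,
        })


def authorize(role: AgentRole, action: str, trail: AuditTrail) -> Decision:
    """Decide whether the agent may act, and log the decision either way."""
    if action in role.allowed:
        decision, reason = Decision.ALLOW, "within role permissions"
    elif action in role.needs_approval:
        decision, reason = Decision.NEEDS_APPROVAL, "requires human sign-off"
    else:
        # Anything not explicitly listed is denied by default.
        decision, reason = Decision.DENY, "not defined for this role"
    trail.record(role.name, action, decision, reason)
    return decision


# Example role: action names are assumptions, not a real product's vocabulary.
service_agent = AgentRole(
    name="service_agent",
    allowed=frozenset({"summarize_case", "route_case"}),
    needs_approval=frozenset({"issue_refund"}),
)
trail = AuditTrail()

for action in ("route_case", "issue_refund", "delete_account"):
    print(action, "->", authorize(service_agent, action, trail).value)
```

The design choice worth copying is the default: anything not written into the role is denied, and denials are logged just like approvals. That is what lets a human answer "Why did you do that?" from the trail rather than from memory.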

Source: Oracle

📊 CX BY THE NUMBERS: Banking Hits The AI Tipping Point, But Spending Is About To Get Serious

Data Source: Finastra Research

Only 2% of financial institutions reported no AI use. Translation: “AI in the business” is no longer a differentiator. It’s table stakes.

42% of U.S. institutions plan to increase AI investment by more than 50% in 2026. That’s not experimentation. That’s operational commitment.

Finastra also highlights a push-pull reality: customer expectations and modernization are accelerating adoption, while security investment is expected to rise sharply in 2026.

The Insight: CX leaders in financial services are about to enter the “prove it” phase. When AI investment jumps this hard, boards start asking what improved: containment, speed to resolution, fraud loss reduction, NPS, complaints, regulatory exposure. If your AI program can’t show measurable movement, it becomes a cost-cutting story by default.

🧰 THE AI TOOLBOX: SoftBank’s “SoftVoice” Style Tech That Calms Abusive Calls In Real Time

The Tool: A real-time voice conversion approach that changes the tone of an aggressive caller into something calmer without changing the literal meaning.

What it does: It processes audio live and “de-escalates” how the customer sounds to the agent. The goal is to reduce harassment, lower stress, and keep agents functional during hostile interactions.

CX Use Case:

Protect agent performance during abuse-heavy queues: If your team handles billing disputes, cancellations, or collections, you know the emotional tax. This kind of layer can reduce burnout and improve adherence without telling agents to “just be resilient.”

Improve coaching and QA signal: When calls are less emotionally explosive, supervisors can more easily spot process issues (policy confusion, unclear disclosures) instead of getting stuck in conflict management mode.

Trust: This is where you put your ethics and compliance hat on. You’re modifying how a customer is heard. Even if meaning isn’t changed, perception is. If you deploy anything like this, you need: transparency policies (when and whether customers are informed), careful monitoring for unintended bias (whose voices get “softened” more often), and a fail-safe that preserves an original recording for compliance, dispute resolution, and legal needs. Done well, it’s employee experience protection. Done sloppily, it can look like you’re “editing” customers.
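What might "monitoring for unintended bias" look like in practice? One rough sketch, in Python, is to compare how often calls get softened across caller segments and flag any segment that sits well above the lowest one. This is an assumption about how such a check could work, not a description of SoftBank's system. The segment labels, the `max_gap` threshold, and the function names are all hypothetical, and how you define segments is a policy decision, not a technical one.

```python
from collections import defaultdict


def softening_rates(calls):
    """calls: iterable of (segment, was_softened) pairs.

    Returns the share of calls in each segment that were softened.
    """
    totals = defaultdict(int)
    softened = defaultdict(int)
    for segment, was_softened in calls:
        totals[segment] += 1
        if was_softened:
            softened[segment] += 1
    return {segment: softened[segment] / totals[segment] for segment in totals}


def flag_disparities(rates, max_gap=0.10):
    """Return segments whose rate exceeds the lowest segment's rate by more than max_gap."""
    lowest = min(rates.values())
    return sorted(s for s, rate in rates.items() if rate - lowest > max_gap)


# Made-up sample data purely for illustration.
sample_calls = [
    ("segment_a", True), ("segment_a", False), ("segment_a", False), ("segment_a", False),
    ("segment_b", True), ("segment_b", True), ("segment_b", True), ("segment_b", False),
]

rates = softening_rates(sample_calls)
flagged = flag_disparities(rates)
print(rates)
print(flagged)
```

A flag here is a prompt for human review, not a verdict. A gap may have a legitimate explanation, but if nobody is looking, you will not know either way.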

Source: Tokyo Weekender

⚡ SPEED ROUND: Quick Hits

Microsoft Flags “Observe, Govern, Secure” As AI Agents Scale Inside Enterprises — A timely reminder that agentic AI without monitoring is just a future incident report.

Contact Center Fraud Jumps As Deepfakes Go Mainstream — Voice fraud is no longer niche, and customer verification flows are now part of your CX strategy.

Congressional Hearing Shows Split On Whether Existing Workplace Laws Cover AI — If you’re using AI for routing, QA, coaching, or performance scoring, expect more scrutiny on fairness and enforcement.

📡 THE SIGNAL: The Moment AI Stops Suggesting And Starts Doing

Today’s stories rhyme. Oracle is putting AI agents inside the flow of work, not as a chatbot side quest. Banking is basically saying, “We’re past pilots,” and now the spending is getting real. Which means the questions get real too: show me containment, show me resolution speed, show me fewer complaints, show me lower risk. Not vibes.

Then you’ve got SoftVoice, and it’s the perfect CX reality check. You might call it employee protection. Someone else might call it “editing the customer.” Both reactions can be true depending on how you deploy it. That’s the point. In 2026, the experience is not just what the customer says and what the agent does. It’s what the system changes along the way.

So here’s the leadership move: stop treating governance like a compliance tax. Treat it like a product feature. Roles, permissions, escalation rules, and an action trail that a human can explain without sweating through their shirt. If a customer asked, “Why did you do that?” could your team answer in plain English and still sound like the good guys?

See you tomorrow.

👥 Share This Issue

If this issue sharpened your thinking about AI in CX, share it with a colleague in customer service, digital operations, or transformation. Alignment builds advantage.

📬 Feedback & Ideas

What’s the biggest AI friction point inside your CX organization right now? Reply in one sentence — I’ll pull real-world examples into future issues.