Alexa Plus Goes Mainstream

Plus: Why “issue resolved” still does not mean “customer loyal.”

📅 February 5, 2026 | ⏱️ 4-min read

Good Morning!

Today’s issue is about a sneaky CX truth: AI can solve the problem, and still leave the customer feeling… not great. We’ve got a major consumer AI rollout, fresh data on why resolution is not loyalty, and a practical agent-assist play that keeps humans in the loop without drowning them in admin work.

The Executive Hook:

Your AI can be fast and still make customers feel trapped. The moment someone thinks, “How do I get out of this bot conversation,” you are no longer delivering service. You are delivering friction. Let’s fix the parts that actually stick in memory.

🧠 THE DEEP DIVE: Alexa Plus moves from “early access” to default behavior

The Big Picture: Amazon has rolled out Alexa Plus broadly to U.S. Prime members, while also offering a limited free tier for non-Prime users via web and app.

What’s happening:

Prime becomes the distribution engine: Alexa Plus is included with Prime in the U.S., and there’s a paid option for non-Prime users who want full access.

The assistant shifts from commands to multi-step help: It’s positioned as more conversational and capable of handling more complex tasks and planning.

This is a CX expectations reset: When customers get used to helpful, natural interaction at home, they bring that expectation to your support and service channels.

Why it matters: Consumer AI rollouts shape the baseline. Customers start expecting faster answers, better memory of context, and smoother handoffs. If your experience cannot recover cleanly when AI gets it wrong, customers will remember the failure, not the speed.

The takeaway: Do not treat “answer quality” as the finish line. Treat it as the entry fee. Your competitive advantage is how gracefully you escalate, apologize, and resolve when the AI misses.

Source: The Verge

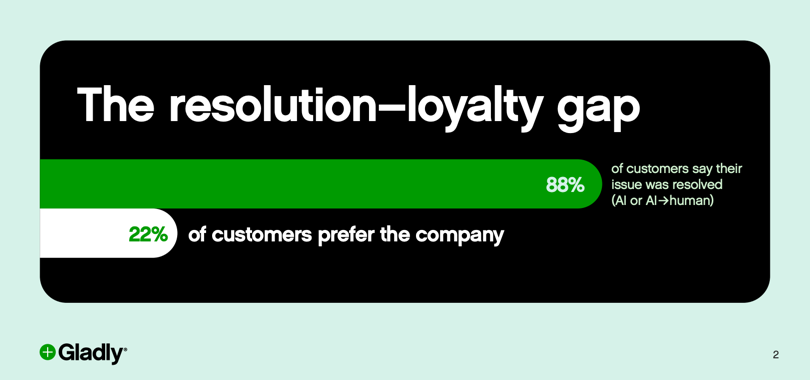

📊 CX BY THE NUMBERS: AI resolves issues, but loyalty is where it breaks

Data Source: Gladly, 2026 Customer Expectations Report

88% say their issue was resolved by AI support. Resolution is becoming table stakes.

Only 22% say the AI experience made them prefer the company afterward. That’s the loyalty gap in one punchy stat.

The implication: If you optimize only for containment and deflection, you can “win” operationally while quietly losing preference.

The Insight: Add metrics that reflect trust and recovery, not just closure. Track successful escalation rate, time to human when needed, and repeat contact after an AI miss. Those are the leading indicators of loyalty.
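For teams that want to see what those three recovery metrics look like in practice, here is a minimal Python sketch. Everything in it is an assumption for illustration: the `Interaction` record, its field names, and the 7-day repeat-contact window are hypothetical and do not come from the Gladly report or any specific support platform. Map them to whatever your own ticketing data exposes.

```python
from dataclasses import dataclass
from statistics import median
from typing import Optional


@dataclass
class Interaction:
    """One AI-handled support contact (hypothetical schema)."""
    customer_id: str
    ai_missed: bool                    # AI failed to resolve the issue
    escalation_requested: bool         # customer or AI asked for a human
    escalation_succeeded: bool         # a human actually picked it up
    seconds_to_human: Optional[float]  # None if no human was reached
    recontacted_within_7d: bool        # assumed 7-day repeat window


def recovery_metrics(interactions):
    """Compute the three recovery metrics; None when there is no data."""
    requested = [i for i in interactions if i.escalation_requested]
    succeeded = [i for i in requested if i.escalation_succeeded]
    waits = [i.seconds_to_human for i in succeeded
             if i.seconds_to_human is not None]
    misses = [i for i in interactions if i.ai_missed]
    repeats = [i for i in misses if i.recontacted_within_7d]

    return {
        "successful_escalation_rate":
            len(succeeded) / len(requested) if requested else None,
        "median_seconds_to_human":
            median(waits) if waits else None,
        "repeat_contact_rate_after_ai_miss":
            len(repeats) / len(misses) if misses else None,
    }


# Made-up sample records, for demonstration only.
SAMPLE = [
    Interaction("A", True, True, True, 40.0, False),
    Interaction("B", True, True, False, None, True),
    Interaction("C", False, False, False, None, False),
    Interaction("D", True, True, True, 100.0, True),
]

if __name__ == "__main__":
    for name, value in recovery_metrics(SAMPLE).items():
        print(f"{name}: {value}")
```

The median is used for time to human because a handful of very long waits can distort an average. Reporting these numbers next to containment and deflection makes it easier to spot when operational wins are costing preference.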

🧰 THE AI TOOLBOX: Agent Assist that helps the agent instead of replacing the agent

The Tool: Humana is expanding use of Google Cloud Agent Assist to support member advocates, with broader rollout planned across service centers during 2026.

What it does: Provides real-time help during conversations, including surfacing relevant information and reducing manual wrap-up so agents can stay focused on the member.

CX Use Case:

Fewer “hold please” moments: If the system can surface the right benefit and eligibility info fast, the interaction feels confident instead of chaotic.

Cleaner continuity: Better summaries and context reduce the classic pain of customers repeating themselves across transfers and follow-ups.

Trust: In sensitive categories like healthcare, this pattern matters. AI supports the human conversation instead of trying to impersonate it. That tends to preserve empathy and accountability.

Source: Humana

⚡ SPEED ROUND: Quick Hits

Optum unveils AI-powered tools for digital prior authorization — Prior auth delays are a real-world customer pain, and shaving time off that process can feel like a service upgrade, not just back-office optimization.

Apple improves the AI Support Assistant in the Apple Support app — Support is shifting from “help articles” to guided diagnostics, and that can reduce effort if the experience stays transparent and easy to escalate.

OpenAI builds an “ads integrity” team ahead of ChatGPT advertising — If ads enter the chat experience, trust becomes a product feature. The first scammy ad moment will not be forgiven.

📡 THE SIGNAL: The handoff is the experience

AI is getting better at answers. Customers are getting used to that. What they are not getting used to is the moment the system stops being helpful and starts being a maze. In 2026, the dividing line is recovery: fast escalation, clear language, and agents who feel enabled instead of policed by the tooling. If your AI cannot gracefully say “I’ve got this wrong, here’s a human, and here’s what I already know,” you are not saving time. You are spending trust.

See you tomorrow,

👥 Share This Issue

If this issue sharpened your thinking about AI in CX, share it with a colleague in customer service, digital operations, or transformation. Alignment builds advantage.

📬 Feedback & Ideas

What’s the biggest AI friction point inside your CX organization right now? Reply in one sentence — I’ll pull real-world examples into future issues.