Claude Just Learned to “Do the Work,” Not Just Talk About It

Plus: customers are feeling slightly better… but still pretty annoyed

📅 January 27, 2026 | ⏱️ 4-min read

Good Morning!

The Executive Hook: Today’s issue is all about the moment AI stops being a chatty assistant and starts doing real work inside real systems. That’s great when it helps. It’s… not great when permissions are sloppy. You’ll also get a quick pulse check on how customers are feeling right now (hint: not exactly relaxed), a tool that makes customer interviews easier to run at scale, and a few quick hits that are worth knowing before your day gets loud.

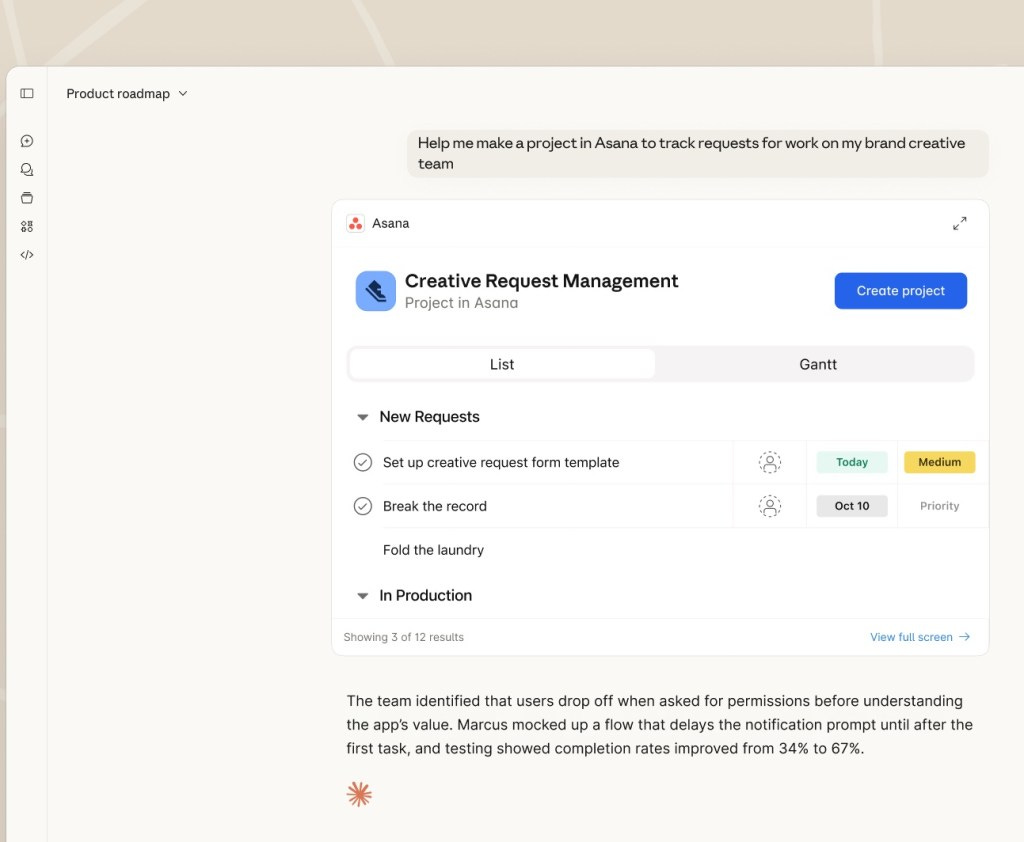

🧠 THE DEEP DIVE: Anthropic Turns Claude Into a Workplace “Doer,” Not Just a Chat Window

The Big Picture: Anthropic launched interactive apps inside Claude, so users can work directly with tools like Slack, Canva, Figma, and Box without leaving the chat window.

What’s happening:

Claude can now pull up interactive app interfaces inside the chatbot, not just generate text.

The launch apps lean toward the tools teams already use every day (Slack, Canva, Figma, Box, Clay), and a Salesforce integration is expected soon.

Anthropic flags the real line in the sand: permissions. If you grant broad access, you’re basically handing AI the master keys and hoping for the best.

Why it matters: For CX leaders, this is the “AI-in-the-workflow” moment. When a bot can draft the message and send it, or pull the file and update the case, your operating model changes. Your policies can’t stop at “approved language.” You need guardrails for what the AI is allowed to do, where it can do it, and what always requires a human (especially the messy edge cases).

The takeaway: Treat AI permissions like production access: least privilege, clear roles, and tight escalation paths. If your AI can take action, your “CX quality program” just became an “AI governance program,” too.

Source: TechCrunch

📊 CX BY THE NUMBERS: Customers are a bit less gloomy… but still under pressure

Data Source: University of Michigan Surveys of Consumers

The Consumer Sentiment Index rose to 56.4 (up from 52.9 in December).

Sentiment is still more than 20% lower than a year ago.

One-year inflation expectations eased to 4.0% (the lowest since January 2025), while the five-year view dipped to 3.3%.

The Insight: Even when sentiment “improves,” customers don’t magically become patient. The pressure shows up as shorter tempers, more price sensitivity, and less tolerance for friction. For CX teams, this is your cue to prioritize effort reduction (clear status updates, fewer handoffs, faster resolution) over “delight theater.”

🧰 THE AI TOOLBOX: Perspective AI

The Tool: Perspective AI helps you run AI-moderated customer interviews at scale and then turns them into a research report you can actually use.

What it does: You prompt the platform with what you’re trying to learn (message testing, unmet needs, service experience, why people leave), and it conducts interviews and organizes findings, quotes, and next steps.

CX Use Case:

Replace “survey fatigue” with real conversations, especially for post-interaction feedback and the churn reasons customers won’t type into a form.

Pressure-test tone and messaging before you roll changes into support macros, chat flows, or self-service content.

Trust: When you scale listening, you’re not just collecting data; you’re shaping what customers believe you care about. Tools that surface real customer language (and the patterns behind it) make it easier to respond with empathy and consistency.

Try a sample interview: Share Your AI Experience

Sample Research Report: The State of AI in Small Business 2025

⚡ SPEED ROUND: Quick Hits

Gartner says GenAI’s customer-service “cost per resolution” will exceed the cost of offshore human agents by 2030. If you’re budgeting automation on the assumption that it’s always cheaper than humans, your spreadsheet is about to have a long, awkward meeting.

C.H. Robinson launches AI agents to reduce missed LTL pickups. This is what “better service” looks like in the real world: fewer missed moments, faster recovery, and visibility customers don’t have to beg for.

Microsoft Teams rolls out Brand Impersonation Protection for calls (mid-February). Every scam call that sounds like “you” is a trust leak; warnings like this help stop the damage before your frontline teams have to explain it.

📡 THE SIGNAL: When AI can act, your guardrails have to grow up

AI is starting to move from “helpful words” to “real actions,” and that’s the moment CX gets serious. When a tool can update a case, trigger a workflow, or send a message in the tools your teams live in, the blast radius gets bigger, fast. Pair that with customers who are still feeling the pressure, and you get one clear priority for CX leaders: reduce effort, tighten handoffs, and lock down permissions like your brand depends on it, because it does.

See you tomorrow,

👥 Share This Issue

If this issue sharpened your thinking about AI in CX, share it with a colleague in customer service, digital operations, or transformation. Alignment builds advantage.

📬 Feedback & Ideas

What’s the biggest AI friction point inside your CX organization right now? Reply in one sentence, and I’ll pull real-world examples into future issues.