When the Bot Is “Helpful” Enough to Break the Law

Plus: Why your next customer might be an AI agent, not a human

📅 February 2, 2026 | ⏱️ 4-min read

Good Morning!

Today’s theme is simple: AI can move fast, but trust moves slower. We have a public-sector chatbot getting shut down after giving illegal guidance, a retail-and-service “single interface” play from Google Cloud, and a reminder that voice is becoming the most natural front door for support.

The Executive Hook:

If your AI experience can confidently give the wrong answer, it is not “innovation.” It is liability with a friendly tone. The next wave of CX wins will not come from who ships the flashiest bot. It will come from who builds the safest handoffs, the clearest guardrails, and the quickest shutoff switch when things go sideways.

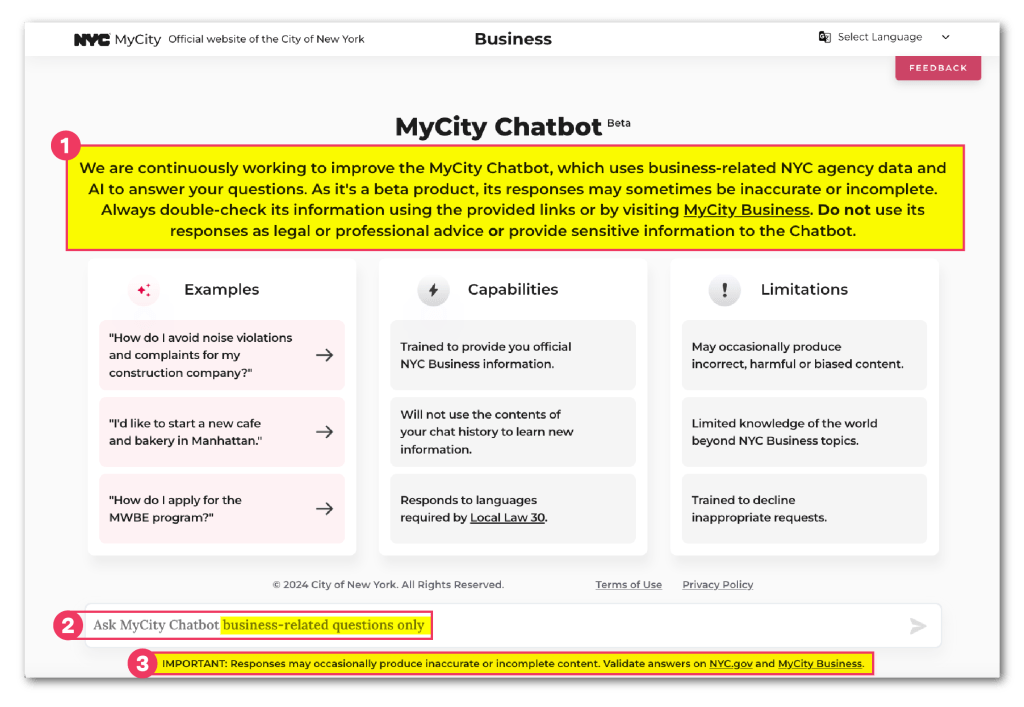

🧠 THE DEEP DIVE: New York City Moves to Pull the Plug on a Chatbot That Gave Illegal Guidance

The Big Picture: A city chatbot meant to help businesses navigate rules is facing shutdown after it repeatedly suggested actions that would violate the law.

What’s happening:

Mayor Zohran Mamdani says the chatbot should be shut down after reporting showed it encouraged illegal behavior.

The tool was launched under former Mayor Eric Adams to help businesses understand city programs and regulations.

Investigations by THE CITY and The Markup found the chatbot giving advice that was not legal, including guidance to employers on tip practices and on accepting cash.

Why it matters:

This is the CX lesson nobody wants to learn in production. “Self-service” is only self-service if it is correct. Otherwise it becomes self-inflicted support volume, reputational damage, and a compliance headache. For CX leaders, the move that matters most here is not the shutdown. It is the implied standard: if an AI experience cannot stay inside policy and law, it should not be customer-facing.

The takeaway:

Build your AI like you build payments. Assume failure, instrument everything, and make the kill switch boringly easy to use.
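For teams that want to see what "boringly easy" could look like, here is a minimal Python sketch, not a production design. Everything in it is a hypothetical stand-in: `GuardedBot`, `generate_reply`, the `blocked_topics` list, and the handoff message are illustrative names, not part of any real product. It shows the three habits from the takeaway in one place: assume the model call can fail, log every outcome, and keep the off switch to a single flag.

```python
import logging
from dataclasses import dataclass, field
from typing import Callable

logger = logging.getLogger("cx_bot")

# Hypothetical fallback text used whenever the bot should not answer itself.
HANDOFF_MESSAGE = "I'm connecting you with a person who can help."


@dataclass
class GuardedBot:
    """Wraps a reply function with a kill switch, an audit log, and a safe fallback."""

    generate_reply: Callable[[str], str]      # assumed model call, supplied by you
    enabled: bool = True                      # the kill switch: one flag
    blocked_topics: tuple = ("tips", "cash")  # illustrative policy list only
    audit_log: list = field(default_factory=list)

    def kill(self, reason: str) -> None:
        """Turn the bot off and record why."""
        self.enabled = False
        logger.warning("bot disabled: %s", reason)

    def answer(self, question: str) -> str:
        """Return a reply, or hand off to a human when anything looks unsafe."""
        if not self.enabled:
            outcome, reply = "disabled", HANDOFF_MESSAGE
        elif any(topic in question.lower() for topic in self.blocked_topics):
            outcome, reply = "blocked_topic", HANDOFF_MESSAGE
        else:
            try:
                outcome, reply = "answered", self.generate_reply(question)
            except Exception:
                # Assume failure: a broken model call becomes a handoff, not a crash.
                logger.exception("model call failed")
                outcome, reply = "error", HANDOFF_MESSAGE
        # Instrument everything: every question gets an outcome on record.
        self.audit_log.append({"question": question, "outcome": outcome})
        return reply
```

In use, `bot.kill("illegal guidance reported")` flips one flag, and every later call to `bot.answer(...)` returns the handoff message while still being logged. A real deployment would likely keep that flag in shared configuration rather than in memory, which is outside the scope of this sketch.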

Source: THE CITY

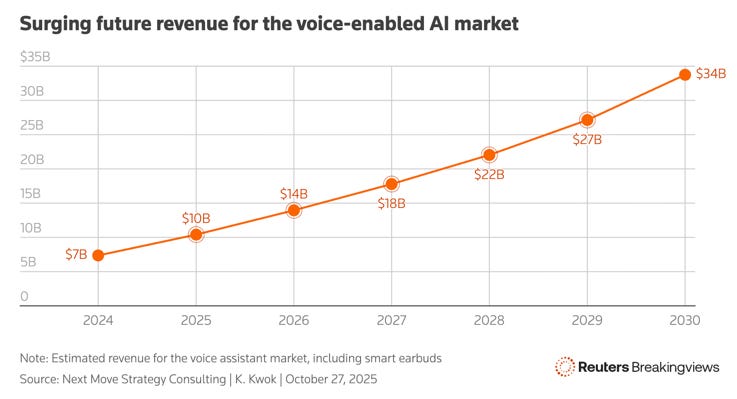

📊 CX BY THE NUMBERS: Voice Is Becoming the Default Interface for Help

Data Source: Reuters Breakingviews

Venture capital firms invested $6.6B in voice AI startups in 2025, up from $4B in 2023. (Translation: the money is chasing voice-first customer experiences.)

The total voice AI market is expected to reach $34B by 2030 (more than triple its 2025 size), per Next Move Strategy Consulting. (Translation: voice is not a feature, it is a channel shift.)

WhatsApp users send 7B+ voice messages daily. (Translation: customers already think in voice. Your support journey should too.)
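For readers who like to check the math, the figures above can be turned into growth rates with a few lines of Python. This is a back-of-the-envelope sketch that uses only the numbers quoted in this section. The variable names are illustrative, and the implied 2025 market size is a ceiling derived from the "more than triple" claim, not a reported figure.

```python
# Figures quoted above, in billions of US dollars.
vc_2023 = 4.0        # venture investment in voice AI startups, 2023
vc_2025 = 6.6        # venture investment in voice AI startups, 2025
market_2030 = 34.0   # projected total voice AI market, 2030

# Total growth in venture investment from 2023 to 2025 (about 65%).
total_growth = vc_2025 / vc_2023 - 1

# Equivalent compound annual growth over those two years (about 28%).
annual_growth = (vc_2025 / vc_2023) ** (1 / 2) - 1

# "More than triple" by 2030 implies the 2025 market is below this value.
implied_2025_ceiling = market_2030 / 3

print(f"VC growth 2023 to 2025: {total_growth:.0%}")
print(f"Compound annual growth: {annual_growth:.1%}")
print(f"Implied 2025 market size: under ${implied_2025_ceiling:.1f}B")
```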

The Insight:

Voice raises the bar. When the experience is spoken, awkward flows feel more awkward. Hallucinations feel more personal. And escalations feel more urgent. If you are betting on voice agents, your “trust stack” matters as much as your model choice.

🧰 THE AI TOOLBOX: Gemini Enterprise for Customer Experience

The Tool: Google Cloud launched Gemini Enterprise for Customer Experience, positioning it as one interface that spans shopping and service.

What it does:

Helps brands deploy prebuilt, configurable agents that keep context from discovery through post-purchase support, and can take multi-step actions with customer consent.

CX Use Case:

Turn “Where’s my order?” into a single conversation that can verify status, fix a fulfillment issue, and trigger a refund or replacement (without making the customer repeat themselves).

Build support agents from existing transcripts and docs, then test and deploy faster, including multilingual experiences at scale.

Trust:

The promise is fewer handoffs and less customer effort. The risk is obvious: if the agent can act, it can also act wrong. The winning play is consented actions plus strict policy boundaries plus monitoring that catches drift early.
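One way to picture "consented actions plus strict policy boundaries plus monitoring" is a small gate that every agent action has to pass through. The sketch below is hypothetical and is not how Gemini Enterprise for Customer Experience works. `ActionRequest`, `POLICY_LIMITS`, the dollar caps, and `escalation_rate` are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ActionRequest:
    action: str               # e.g. "refund" or "replacement"
    amount: float             # order value affected, in dollars
    customer_consented: bool  # did the customer approve this step?


# Hypothetical policy boundary: allowed actions and the most each may cost.
POLICY_LIMITS = {"refund": 100.0, "replacement": 250.0}


def decide(request: ActionRequest) -> str:
    """Return "execute", "ask_consent", or "escalate" for a proposed action."""
    if request.action not in POLICY_LIMITS:
        return "escalate"      # unknown action: a human decides
    if not request.customer_consented:
        return "ask_consent"   # never act without the customer's approval
    if request.amount > POLICY_LIMITS[request.action]:
        return "escalate"      # over the cap: a human decides
    return "execute"


def escalation_rate(decisions: list[str]) -> float:
    """Share of decisions that were escalated; a simple signal to watch for drift."""
    if not decisions:
        return 0.0
    return decisions.count("escalate") / len(decisions)
```

A $40 refund with consent passes straight through, the same refund without consent triggers a consent prompt, and a $500 refund goes to a person. Tracking the escalation rate over time is one simple way to notice when the agent's behavior starts to shift.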

⚡ SPEED ROUND: Quick Hits

No humans needed: New AI platform takes industry by storm — Moltbook is a social network for agents to talk to each other, and yes, that has implications for how your brand gets “shopped” and evaluated when the customer is software.

The AI adoption story is haunted by fear as today’s efficiency programs look like tomorrow’s job cuts — Carolyn Dewar makes the case that trust and psychological safety are the real accelerators, because scared teams do safe, small pilots forever.

📡 THE SIGNAL: The kill switch is a CX feature

Every leader loves the launch moment. The mature move is what happens after. If your AI experience starts inventing policy, skipping steps, or “helpfully” breaking rules, your brand is the one speaking, not the model. Build the kill switch. Practice using it. Then build the version you can trust enough to keep on.

See you tomorrow,

👥 Share This Issue

If this issue sharpened your thinking about AI in CX, share it with a colleague in customer service, digital operations, or transformation. Alignment builds advantage.

📬 Feedback & Ideas

What’s the biggest AI friction point inside your CX organization right now? Reply in one sentence — I’ll pull real-world examples into future issues.