Your AI Copilot Just Read the “Confidential” Email

Plus: Why customer trust is becoming the real growth ceiling for AI


📅 February 19, 2026 | ⏱️ 5 min read

Good Morning!

If you’ve ever rolled out a new CX tool and thought, “We’ve got governance covered,” today’s stories are here to lovingly slap that confidence right out of your hands.

We’ve got one big lesson showing up in three different places: AI is getting faster at doing work, but it’s also getting faster at crossing lines you thought were protected.

The Executive Hook:

Speed is intoxicating. Trust is expensive. Most CX leaders are being asked to buy speed with trust-money they do not actually have in the budget. The question is not “Can AI help?” The question is “Can AI help without making your legal team age 10 years?”

🧠 THE DEEP DIVE: When “Confidential” Isn’t Confidential in Copilot

The Big Picture: Microsoft confirmed a bug where Copilot could process and summarize emails labeled “confidential,” even when protections like data loss prevention (DLP) policies were supposed to stop it.

What’s happening:

A guardrail failed in the exact place leaders rely on it. Many organizations count on labels and DLP policies as the final “nope” for sensitive content. If that line blurs, trust blurs.

This wasn’t a theoretical risk. The issue reportedly persisted for weeks, which means the impact is less about one bad moment and more about repeat exposure.

It hits employee trust first, then customer trust. Once employees believe “the AI might read the wrong thing,” they stop using it, or worse, they work around it in messy ways.

Why it matters: In CX, internal tools become external outcomes. If agents, account teams, or supervisors hesitate to use copilots, your “AI-enabled service” becomes inconsistent service. Customers feel inconsistency like a pebble in a shoe. Small, constant, maddening.

The takeaway: Treat AI access like a kitchen knife. Useful. Dangerous. Never left unattended. If you run Copilot (or any enterprise AI), audit your “confidential” flows this week: labels, DLP, retention, and what the model is allowed to touch.

Source: TechCrunch

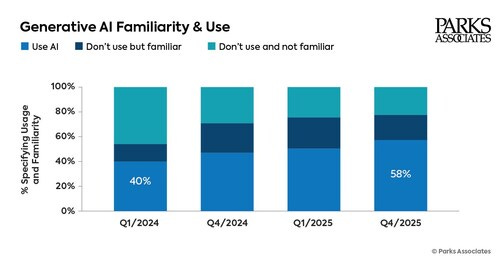

📊 CX BY THE NUMBERS: GenAI Is Mainstream, but Trust Is Not

Data Source: Parks Associates AI Experience Dashboard Q4 2025

58% of US internet households use generative AI — Adoption is no longer the question. The question is what people think it’s doing with their data.

Only 16% use a paid AI app — People will try AI. They are not rushing to commit to it financially.

30% say they’re less likely to buy something marketed as “AI-powered” — That’s a branding warning sign. “AI inside” can be a turnoff if customers associate it with risk, spam, or cold service.

The Insight: CX teams should stop treating “AI-powered” as a benefit statement. It’s a proof statement. If you can’t explain the guardrails in plain language, you won’t earn the trust.

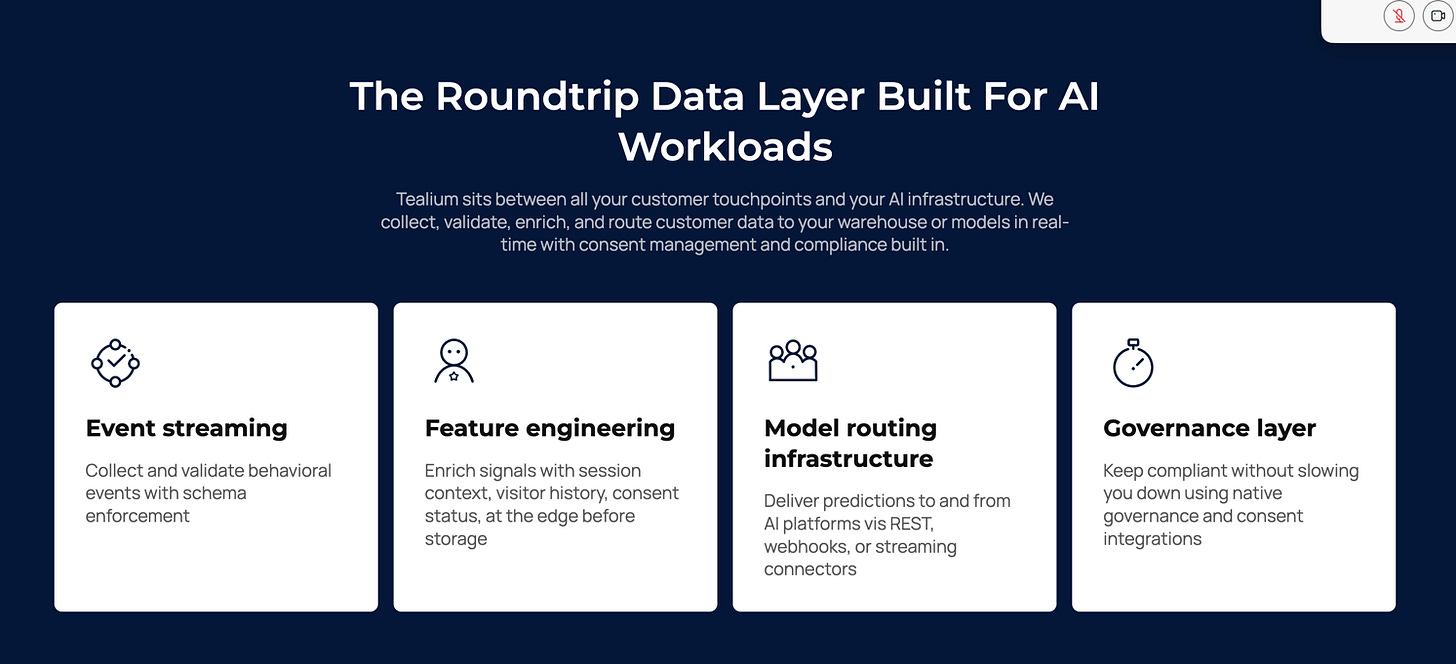

🧰 THE AI TOOLBOX: Tealium for Governed Customer Data in GenAI

The Tool: Tealium (customer data platform and real-time data “plumbing” for personalization)

What it does: Helps unify customer signals (web, app, CRM, service interactions) and route them in real time with governance controls, so AI experiences can use context without turning into a privacy or compliance mess.

CX Use Case:

Better self-service answers without creepy personalization. Use only consented signals (recent order status, product owned, plan level) to let chat and voice experiences respond accurately without “guessing” or overreaching.

Smarter agent assist with fewer hallucinations. Feed agent tools the right customer context and policy-safe knowledge, so agents don’t have to “fix the bot” mid-conversation.

Trust: Context is powerful, and power needs rules. The risk with customer data plus GenAI is accidental over-collection, over-sharing, or responses that reveal more than the customer expects. Mitigation looks like: consent-based data use, strict field-level access, redaction for sensitive categories, and testing prompts against worst-case scenarios (like shared accounts, minors, protected classes, and disputes).

Source: GlobeNewswire

⚡ SPEED ROUND: Quick Hits

TD Bank Adds GenAI Assistant Inside Its Contact Center — A practical example of “AI for agents” (faster answers, fewer escalations) instead of “AI replacing agents.”

EU Privacy Pressure Mounts Around Grok on X — More proof that the compliance story is now part of the product story.

Rollick Launches an AI Assistant to Convert Shopper Conversations into Action — Retail CX is leaning hard into “conversation to conversion,” but the brand tone and guardrails will make or break it.

📡 THE SIGNAL: The New CX Differentiator Is “Explainability”

The AI race is not about who automates the most. It’s about who can explain their automation without sounding like a hostage negotiator. Customers do not need to understand transformers or tokens. They do need to understand: what you’re using, what you’re not using, and what happens when it goes wrong. Design for failure on purpose, then talk about those protections like a normal human. So here’s the leadership choice: will you ship AI features, or will you ship AI confidence? And what would your customer say if you had to explain it in one sentence?

See you tomorrow,

👥 Share This Issue

If this issue sharpened your thinking about AI in CX, share it with a colleague in customer service, digital operations, or transformation. Alignment builds advantage.

📬 Feedback & Ideas

What’s the biggest AI friction point inside your CX organization right now? Reply in one sentence — I’ll pull real-world examples into future issues.