Your Next “Channel” Is a Blue Bubble

Plus: AI agents are sliding into the thread, and your ops better slide with them

📅 February 3, 2026 | ⏱️ 4-min read

Good Morning!

If you’ve been treating messaging as “just another channel,” today’s issue is your friendly wake-up text. Customers don’t think of iMessage or RCS as a channel. They think of it as their space. And now AI is showing up there, ready to help… or ready to get your brand blocked.

Inside today: a big move toward assistants living inside message threads, a few numbers that explain why so many AI pilots never graduate, and a practical tool you can use to build agent workflows without creating chaos for your frontline teams.

The Executive Hook:

Everyone wants “low-effort” CX. Then we put customers through the classic obstacle course: logins, portals, repeated questions, and a chatbot that says “I can help” while helping exactly zero. AI can reduce effort fast. It can also create new ways to annoy people at scale. The deciding factor is not the model. It’s whether you design trust, permissions, and handoffs like grown-ups.

🧠 THE DEEP DIVE: AI agents are moving into iMessage and RCS

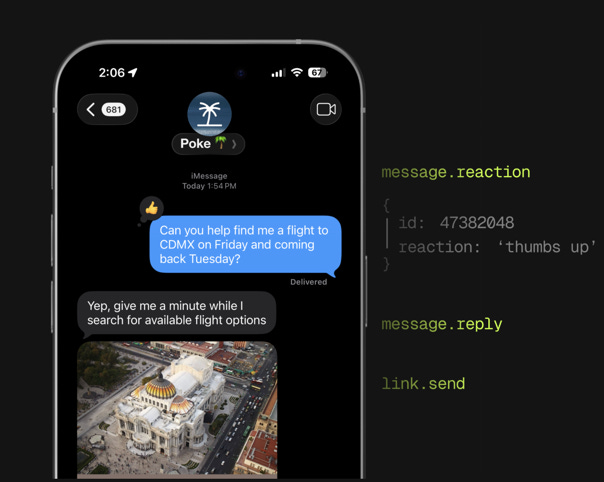

The Big Picture: Linq raised $20M to help businesses put AI assistants directly into native messaging threads like iMessage, RCS, and SMS.

What’s happening:

Linq’s pitch is infrastructure: an API layer that lets companies run assistants where customers already message, instead of forcing them into a separate app or web flow.

They’re betting on a trend that is already visible: more assistants are starting to live inside messaging, with Poke as one example.

The subtle CX move: when an experience looks and feels “native,” customers judge it differently. It changes what they trust, what they tolerate, and how quickly they bail. (If you’ve ever ignored a sketchy business text, you know.)

Why it matters:

When service shows up in a personal message thread, the stakes go up. A mistake on a website is annoying. A mistake in someone’s messages feels intrusive. Your assistant cannot “kind of” verify identity, “sort of” explain consent, or “maybe” escalate when it’s stuck. In a thread, ambiguity reads like incompetence.

The takeaway:

Treat messaging like a first-class service surface. Before you scale, nail three things:

Identity and verification

What the agent can do without asking

A human handoff that stays in the same thread
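To make those three checks concrete, here is a minimal sketch in Python of a single gate that every inbound request could pass through. The action names, the `verified` flag, and the routing labels are hypothetical and invented for illustration. This is not Linq’s API or any messaging platform’s, just one way to keep verification, permissions, and handoff explicit instead of “kind of” handled.

```python
from dataclasses import dataclass

# Hypothetical policy: actions the agent may complete without asking a human.
AUTO_ALLOWED = {"order_status", "store_hours"}
# Hypothetical policy: actions that touch account data and need verified identity.
NEEDS_VERIFICATION = {"order_status", "change_address", "refund"}


@dataclass
class Thread:
    thread_id: str
    verified: bool = False      # flipped to True after an identity check succeeds
    assigned_to: str = "agent"  # "agent" or "human"; the thread_id never changes


def route(thread: Thread, action: str) -> str:
    """Return the next step for a requested action, keeping it in the same thread."""
    # 1. Identity and verification come first, with no "sort of" verified state.
    if action in NEEDS_VERIFICATION and not thread.verified:
        return "ask_to_verify"
    # 2. Bounded permissions: only pre-approved actions run without asking.
    if action in AUTO_ALLOWED:
        return "agent_handles"
    # 3. Everything else goes to a human, in the same thread.
    thread.assigned_to = "human"
    return "handoff_same_thread"


# Usage
t = Thread("thread-42")
print(route(t, "store_hours"))   # agent_handles
print(route(t, "refund"))        # ask_to_verify
t.verified = True
print(route(t, "order_status"))  # agent_handles
print(route(t, "refund"))        # handoff_same_thread
```

The ordering is the design choice: verification runs first, so even a pre-approved action like an order lookup waits for a verified customer, and anything outside the allowed list lands with a human instead of stalling in the thread.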

Source: TechCrunch

📊 CX BY THE NUMBERS: Agents are booming, but governance is the real unlock

Data Source: Databricks, State of AI Agents (2026 report)

Multi-agent systems grew 327% in less than four months. Translation: the “one chatbot” era is getting replaced by teams of agents doing different jobs.

Teams using evaluation tools get nearly 6x more AI projects into production. You cannot scale what you cannot measure, and “seems fine in the demo” is not a measurement.

Teams using AI governance tools get over 12x more projects into production. Governance is not paperwork. It’s how you ship without waking up to a brand fire.

The Insight:

Most teams are not stuck because the model is “not smart enough.” They’re stuck because the org is not set up to let the model do real work safely. This is classic CX, just with fancier software. Trust and control are how you move from pilot theater to actual results.

🧰 THE AI TOOLBOX: Make’s AI Agent app (new version)

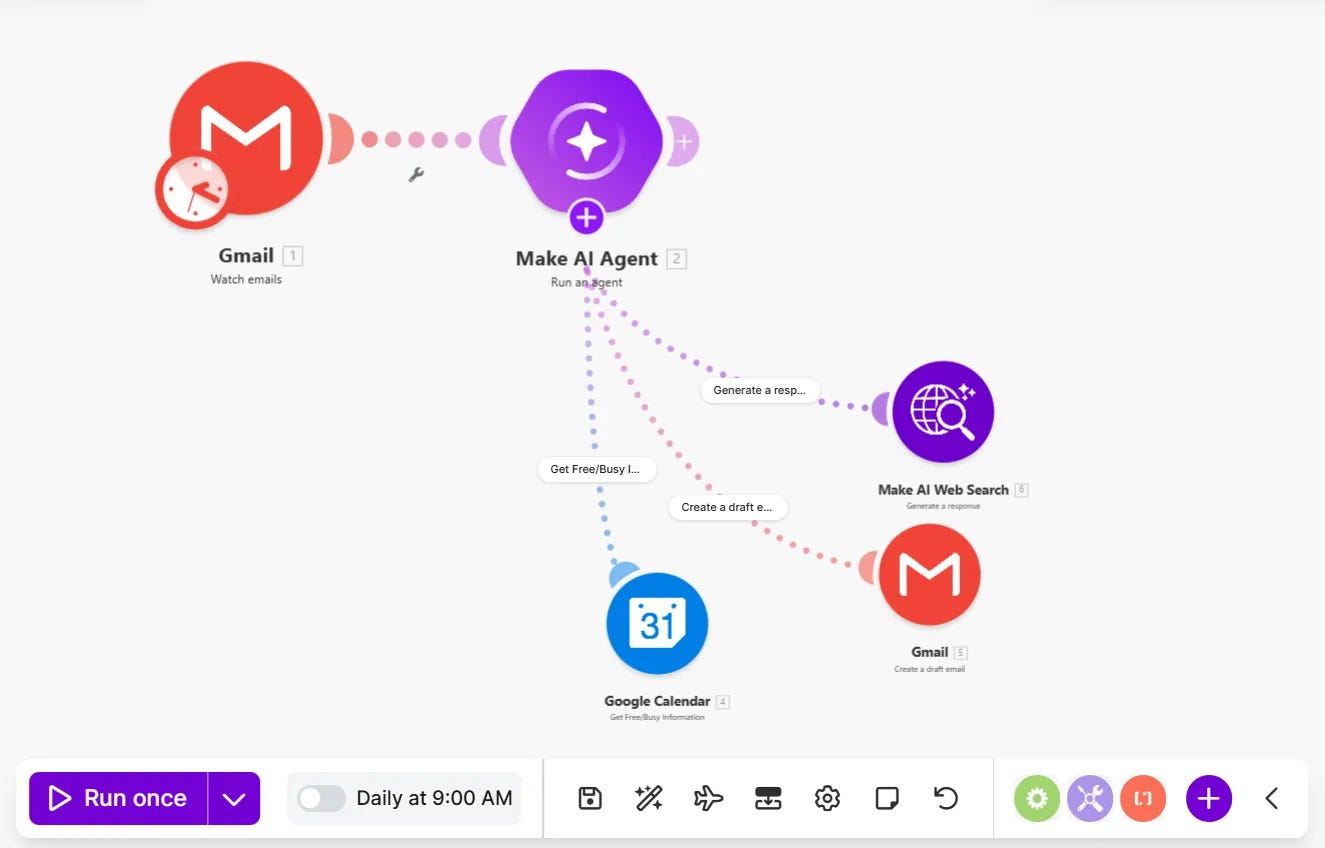

The Tool: Make shipped a new version of its AI Agent app (open beta), aimed at building and testing agent workflows inside automations.

What it does:

You configure an agent inside a workflow, connect it to tools (email, CRM, databases, and MCP server tools), and inspect its behavior so you can debug its decisions before customers feel the consequences.

CX Use Case:

Ticket triage that respects reality: categorize, prioritize, assign, and attach context before a human touches the case. Less swivel-chairing, fewer “wait, what is this about?” moments.

Post-interaction cleanup: summarize threads, tag themes, and route patterns into VoC or QA automatically. The kind of work that is important but somehow never happens consistently.
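Here is a minimal sketch of that triage step in Python. It is not Make’s API. In Make, an agent would make these decisions inside an automation. The categories, keywords, queue names, `customer_tier` field, and priority rule are all hypothetical, and a simple keyword match stands in for the model’s judgment so the flow is easy to follow.

```python
import re
from dataclasses import dataclass, field

# Hypothetical routing table: category -> (trigger keywords, destination queue).
RULES = {
    "billing": ({"refund", "charge", "invoice"}, "billing-team"),
    "delivery": ({"late", "tracking", "shipped"}, "logistics-team"),
}


@dataclass
class Ticket:
    text: str
    customer_tier: str = "standard"  # hypothetical field
    category: str = "general"
    priority: str = "normal"
    queue: str = "general-queue"
    context: dict = field(default_factory=dict)


def triage(ticket: Ticket, history: list[str]) -> Ticket:
    """Categorize, prioritize, assign, and attach context before a human sees it."""
    words = set(re.findall(r"[a-z']+", ticket.text.lower()))
    # Categorize and assign: the first category whose keywords appear wins.
    for category, (keywords, queue) in RULES.items():
        if words & keywords:
            ticket.category, ticket.queue = category, queue
            break
    # Prioritize: a stand-in rule (assumption) for VIP customers or "urgent".
    if ticket.customer_tier == "vip" or "urgent" in words:
        ticket.priority = "high"
    # Attach context: the last three contacts plus a total count.
    ticket.context = {"recent_contacts": history[-3:], "contact_count": len(history)}
    return ticket


# Usage
t = triage(Ticket("My refund is late and this is urgent"), ["a", "b", "c", "d"])
print(t.category, t.queue, t.priority)  # billing billing-team high
```

Swapping the keyword rules for an agent call changes how the category is chosen, not the shape of the output: a category, a priority, a queue, and context attached before a human opens the ticket.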

Trust:

The best part is the implied discipline: start with bounded decisions, then expand permissions on purpose. Do not hand an agent high-stakes tasks you would not trust a smart intern to handle.

Source: Make

⚡ SPEED ROUND: Quick Hits

OpenAI launches Codex app to gain ground in AI coding race — Faster coding means faster product change. If your CX backlog is real, this can shorten the time between “we heard you” and “we fixed it.” (If your backlog is imaginary, it will not help.)

DeepL launches Voice API for real-time speech transcription and translation — Real-time translation raises the bar for “we don’t support that language.” It changes staffing options, routing strategy, and what customers will accept.

Carrier adds a generative AI “Tell Me More” feature to Abound — This is field enablement: fewer “what does this alert mean” moments, more confident action, and less tribal knowledge locked in one veteran’s head.

📡 THE SIGNAL: The thread is the new storefront

When service moves into a personal message thread, your brand stops feeling like “a company I interact with” and starts feeling like “a presence in my life.” That’s powerful. It’s also risky. The teams that handle this well won’t be the ones with the flashiest AI. They’ll be the ones who treat consent, tone, and rescue paths as part of the product, not an afterthought. Because in messaging, that stuff is not compliance. It’s the experience.

See you tomorrow,

👥 Share This Issue

If this issue sharpened your thinking about AI in CX, share it with a colleague in customer service, digital operations, or transformation. Alignment builds advantage.

📬 Feedback & Ideas

What’s the biggest AI friction point inside your CX organization right now? Reply in one sentence — I’ll pull real-world examples into future issues.